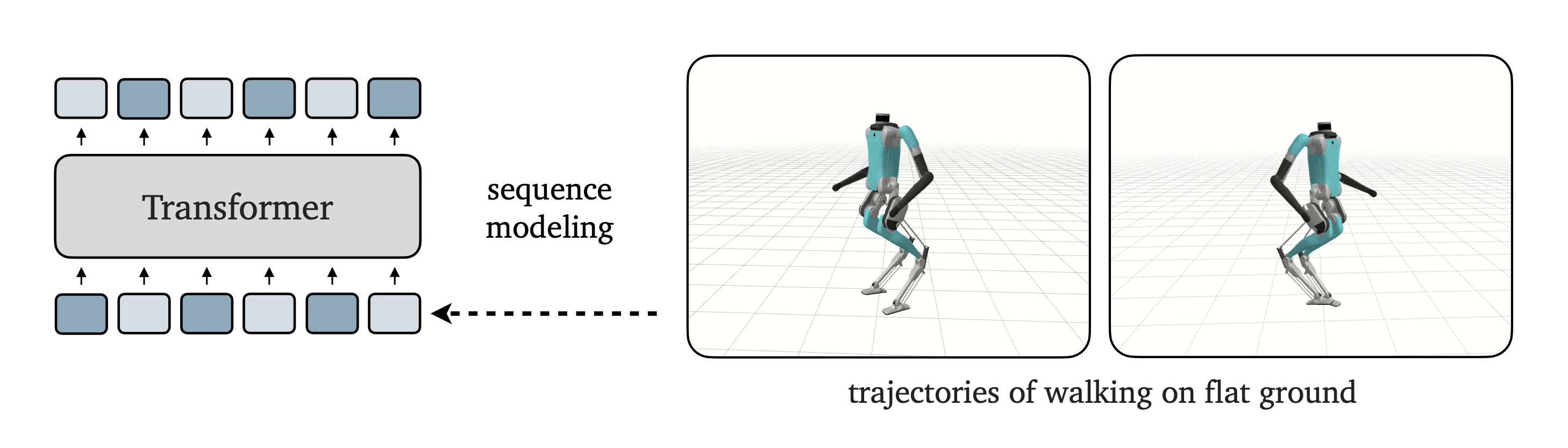

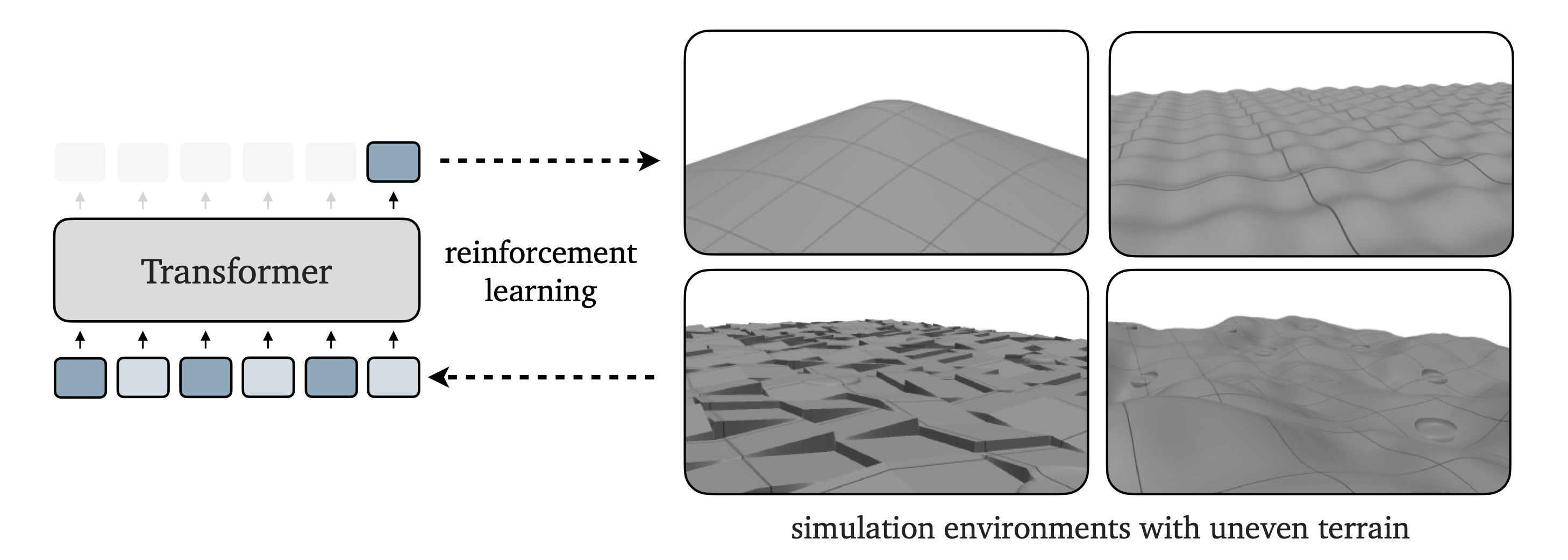

We propose a method for learning humanoid locomotion over challenging terrain. Our model is a transformer that predicts the next action from the history of proprioceptive observations and actions. Training proceeds in two steps. First, we pre-train the model with sequence modeling on a dataset of flat-ground trajectories, which we construct from three sources: a prior neural network policy, a model-based controller, and human motion sequences. Second, we fine-tune the model with reinforcement learning on uneven terrain in simulation. Pre-training lets the model vicariously absorb the skills present in prior data and provides a good starting point for learning new skills efficiently. We call our model Humanoid Transformer 2, or HT-2 for short.
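To make the input format concrete, here is a minimal Python sketch of how a causal policy could keep its observation-action history at deployment time. The class `HistoryBuffer`, the function `rollout`, the `context_len` window size, and the stand-in `policy` and `env_step` callables are all hypothetical; the page does not specify the context length, the tokenization, or the vector layout. At each step the model sees o, a, ..., a, o ending with the newest observation, outputs the next action, and that action is appended to its own history.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple

Vector = List[float]
Token = Tuple[str, Vector]  # ("obs", o_t) or ("act", a_t)


class HistoryBuffer:
    """Rolling window of the last `context_len` observations and the actions between them.

    Hypothetical helper for illustration; not taken from the HT-2 code.
    """

    def __init__(self, context_len: int) -> None:
        if context_len < 1:
            raise ValueError("context_len must be at least 1")
        self.obs: Deque[Vector] = deque(maxlen=context_len)
        # One fewer action than observations: the newest observation has no action yet.
        self.act: Deque[Vector] = deque(maxlen=context_len - 1)
        self._needs_obs = True  # an observation must arrive before the next query

    def push_observation(self, o: Vector) -> None:
        self.obs.append(list(o))
        self._needs_obs = False

    def push_action(self, a: Vector) -> None:
        self.act.append(list(a))
        self._needs_obs = True

    def tokens(self) -> List[Token]:
        """Interleave as o, a, o, a, ..., o, ending with the newest observation."""
        if self._needs_obs:
            raise RuntimeError("push an observation before querying the policy")
        out: List[Token] = []
        for i, o in enumerate(self.obs):
            out.append(("obs", o))
            if i < len(self.act):
                out.append(("act", self.act[i]))
        return out


def rollout(
    policy: Callable[[List[Token]], Vector],
    env_step: Callable[[Vector], Vector],
    first_obs: Vector,
    steps: int,
    context_len: int,
) -> List[Vector]:
    """Run the policy autoregressively: each action it outputs joins its own history."""
    buf = HistoryBuffer(context_len)
    buf.push_observation(first_obs)
    actions: List[Vector] = []
    for _ in range(steps):
        a = policy(buf.tokens())  # predict a_t from (..., a_{t-1}, o_t)
        actions.append(a)
        buf.push_action(a)
        buf.push_observation(env_step(a))  # environment returns o_{t+1}
    return actions
```

In a real system `policy` would be the transformer and `env_step` the simulator or robot interface; any callables with these signatures are enough to exercise the loop.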

Pre-training with Sequence Modeling

Our core observation is that even though the capabilities we want to learn, in this case humanoid locomotion over challenging terrain, are not readily available in the form of data, we can still leverage the data sources that are available to learn relevant skills. Intuitively, a robot that already knows how to walk on flat ground should learn to walk up a slope more easily than a robot that cannot walk at all. Pre-training a humanoid model on existing trajectories is similar in spirit to pre-training a language model on text from the Internet.
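Assuming pre-training reduces to supervised next-action prediction with teacher forcing (the page says only "sequence modeling"), the objective on a single trajectory could look like the sketch below. `context_at`, `next_action_loss`, the mean-squared-error loss, and the callable `policy` interface are illustrative choices, not the authors' code; a real implementation would batch many trajectories and backpropagate through a transformer.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Token = Tuple[str, Vector]  # ("obs", o_i) or ("act", a_i)
Policy = Callable[[List[Token]], Vector]


def context_at(
    observations: Sequence[Vector],
    actions: Sequence[Vector],
    t: int,
    context_len: int,
) -> List[Token]:
    """Ground-truth history o_{t-k+1}, a_{t-k+1}, ..., a_{t-1}, o_t (teacher forcing)."""
    start = max(0, t - context_len + 1)
    tokens: List[Token] = []
    for i in range(start, t + 1):
        tokens.append(("obs", list(observations[i])))
        if i < t:  # a_t itself is the prediction target, so it is excluded
            tokens.append(("act", list(actions[i])))
    return tokens


def next_action_loss(
    policy: Policy,
    observations: Sequence[Vector],
    actions: Sequence[Vector],
    context_len: int,
) -> float:
    """Mean squared error between predicted and recorded actions over one trajectory."""
    if len(observations) != len(actions) or not observations:
        raise ValueError("expected a non-empty trajectory with one action per observation")
    total, count = 0.0, 0
    for t in range(len(observations)):
        pred = policy(context_at(observations, actions, t, context_len))
        for p, a in zip(pred, actions[t]):
            total += (p - a) ** 2
            count += 1
    return total / count
```

Because the context always comes from recorded data rather than the model's own outputs, every timestep of every trajectory yields a training example, which is what makes flat-ground trajectories from heterogeneous sources straightforward to reuse.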

Fine-tuning with Reinforcement Learning

After pre-training on a dataset of trajectories, we obtain a model that is able to walk. However, since the model was trained on flat-ground trajectories, its capabilities are largely limited to gentle terrain. For example, it cannot walk on steep slopes or on terrains that differ substantially from those in the pre-training data. Nevertheless, as our experiments show, the pre-trained model can serve as an effective starting point for acquiring new capabilities through interaction.
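The page does not name the reinforcement learning algorithm used for fine-tuning. As one plausible illustration only, Proximal Policy Optimization (PPO) is a common choice for locomotion in simulation; its clipped surrogate objective is sketched below in plain Python. In this setting, the policy being optimized would be initialized from the pre-trained transformer. The function name `ppo_clip_objective` and the default `clip_eps=0.2` are assumptions for illustration.

```python
import math
from typing import Sequence


def ppo_clip_objective(
    logp_new: Sequence[float],
    logp_old: Sequence[float],
    advantages: Sequence[float],
    clip_eps: float = 0.2,
) -> float:
    """PPO clipped surrogate, averaged over samples (to be maximized).

    r = exp(logp_new - logp_old) is the probability ratio between the policy being
    updated and the policy that collected the data; clipping r to [1 - eps, 1 + eps]
    limits how far a single update can move the policy.
    """
    n = len(advantages)
    if n == 0 or len(logp_new) != n or len(logp_old) != n:
        raise ValueError("inputs must be non-empty and equally long")
    total = 0.0
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / n
```

A training loop would minimize the negative of this value, typically alongside a value-function loss. Limiting each update in this way is one reason such methods are a reasonable fit for fine-tuning: they discourage large early updates that could overwrite the walking behavior learned during pre-training.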

Berkeley Hikes

To evaluate our approach in real-world scenarios, we perform two case studies. In the first, we take our robot on five different hikes in the Berkeley area. These are ordinary hiking trails meant for people, about 4.3 miles (roughly 6.9 km) long in total. Our policy successfully completed all of the hikes.

Hiking in Berkeley.

San Francisco Streets

In our second real-world study, we evaluate our controller on steep streets in San Francisco. These streets are steep enough to be hard even for healthy adults. Moreover, some of them are considerably steeper than anything seen during training, where slopes reached at most a 20% grade. For example, the street shown in the top-right corner below has a 31% grade, making it one of the steepest streets in San Francisco.
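Road grade is rise over horizontal run expressed as a percentage, so it does not map linearly to slope angle. The small helper below (the name `grade_to_degrees` is hypothetical) converts grade to inclination, putting the training maximum and the steepest street in degrees.

```python
import math


def grade_to_degrees(grade_percent: float) -> float:
    """Convert road grade (100 * rise / horizontal run) to an inclination angle in degrees."""
    return math.degrees(math.atan(grade_percent / 100.0))


for grade in (20.0, 31.0):
    print(f"{grade:.0f}% grade -> {grade_to_degrees(grade):.1f} degrees")
# 20% grade -> 11.3 degrees
# 31% grade -> 17.2 degrees
```

By the same formula, a 100% grade corresponds to 45 degrees, not a vertical wall.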

Walking on steep streets in San Francisco.

Albany Beach

Recall that we fine-tune the model in simulation only on rigid terrain, without any soft or deformable surfaces. Nevertheless, as our results demonstrate, models fine-tuned this way can generalize to unseen real-world terrain during deployment. We show example videos of our robot walking on a sandy beach below.

Walking on sand.

Push on Uneven Terrain

In addition to adapting to terrain changes, a strong controller should also be able to handle external disturbances. We show an example where we apply a strong push while the robot is on uneven grass. The model quickly adapts its gait to avoid falling and maintain balance.

External disturbances.

BibTeX

@article{HumanoidTerrain2024,
  title={Learning Humanoid Locomotion over Challenging Terrain},
  author={Ilija Radosavovic and Sarthak Kamat and Trevor Darrell and Jitendra Malik},
  year={2024},
  journal={arXiv:2410.03654}
}